What is Ambari

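Ambari is itself distributed software made up of two main parts: Ambari Server and Ambari Agent. In short, the user tells the Ambari Agents, through Ambari Server, which software to install. Each Agent periodically reports the status of every software module on its machine back to Ambari Server, and that status is shown in the Ambari GUI so the user can see the state of the cluster and maintain it accordingly.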

Ambari installation and deployment

Environment preparation

Versions used: ambari-2.7.3 and HDP-3.1.0.0

1. Download the installation package (master node)

wget http://public-repo-1.hortonworks.com/ambari/centos7/2.x/updates/2.7.3.0/ambari-2.7.3.0-centos7.tar.gz

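2. Configure the Java environment (all nodes)

# Download the JDK yourself
# Set the environment variables
# Apply the environment variables
# Check the Java version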
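The Java step lists only the comments, so here is a minimal sketch of what setting the environment variables could look like. The function name `write_java_profile`, the JDK directory, and the profile path are all assumptions for illustration; none of them come from the original article.

```shell
# Sketch: write JAVA_HOME settings into a profile script.
# Both paths are passed in by the caller; the values in the usage note are hypothetical.
write_java_profile() {
    jdk_dir=$1    # directory where the JDK was unpacked
    profile=$2    # profile script to (over)write
    cat > "$profile" <<EOF
export JAVA_HOME=$jdk_dir
export PATH=\$JAVA_HOME/bin:\$PATH
EOF
}
```

A possible use on each node, assuming the JDK was unpacked to /usr/local/jdk1.8.0_211: `write_java_profile /usr/local/jdk1.8.0_211 /etc/profile.d/java.sh`, then `source /etc/profile.d/java.sh` and `java -version` to confirm.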

3. Set hostname and hosts (all nodes)

# Set the hostname
# Edit the network settings
vim /etc/sysconfig/network
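For the hosts part of this step, a small helper can keep the name-to-IP mappings consistent across nodes. This is only a sketch: `add_host_entry` is a hypothetical helper, and the IP addresses in the usage note are placeholders.

```shell
# Sketch: append "ip name" to a hosts file unless the name already appears in it.
add_host_entry() {
    ip=$1
    name=$2
    hosts_file=${3:-/etc/hosts}
    # grep -w matches the name as a whole word, so re-running does not add duplicates.
    if ! grep -qw -- "$name" "$hosts_file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}
```

On CentOS 7 the hostname itself can be set with `hostnamectl set-hostname hadoop15`; the mapping would then be added on every node with something like `add_host_entry 192.168.1.15 hadoop15`.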

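4. Clock synchronization (all nodes)

Every machine must keep its clock synchronized.

# Install ntpdate
# Synchronize the clock on a single machine; the machine must be able to reach the Internet
# Clock synchronization script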
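The synchronization script itself did not survive, so the following is one way such a periodic sync could be set up with cron. The NTP server (`pool.ntp.org`), the 10-minute interval, and the helper name `ntp_cron_line` are assumptions.

```shell
# Sketch: build a crontab line that runs ntpdate at a fixed interval.
ntp_cron_line() {
    server=${1:-pool.ntp.org}   # NTP server (assumption)
    minutes=${2:-10}            # interval in minutes (assumption)
    printf '*/%s * * * * /usr/sbin/ntpdate %s >/dev/null 2>&1\n' "$minutes" "$server"
}
```

Typical use on each node would be `yum -y install ntpdate`, a one-off `ntpdate pool.ntp.org`, and then `(crontab -l 2>/dev/null; ntp_cron_line) | crontab -` to register the periodic job.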

5. Raise the open-file limit (all nodes)

vim /etc/security/limits.conf
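The article does not show which lines go into limits.conf. As a sketch, the entries below raise the open-file limit for all users; the value 65536 and the helper name `append_limits` are assumptions, so pick a limit that suits your cluster.

```shell
# Sketch: append soft and hard open-file limits for all users to a limits file.
append_limits() {
    limits_file=$1
    nofile=${2:-65536}   # limit value (assumption)
    cat >> "$limits_file" <<EOF
* soft nofile $nofile
* hard nofile $nofile
EOF
}
```

For example, `append_limits /etc/security/limits.conf`; after logging in again, `ulimit -n` should report the new value.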

6. Disable the firewall (all nodes)

systemctl stop firewalld.service
# Edit the configuration file

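7. Passwordless SSH login (master node)

# Configure passwordless login from node hadoop15 to the other nodes; run these steps on hadoop15
# Verify that passwordless SSH works
# Copy the key (needed later)
# Set umask
# Explanation: a umask of 022 means new directories are created with mode 755, i.e. rwxr-xr-x (owner: all permissions; group: read and execute; others: read and execute); new regular files get 644
# Detailed reference: https://www.cnblogs.com/walblog/articles/7903319.html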
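Two small sketches for this step. The first distributes the public key with `ssh-copy-id` and defaults to a dry run that only prints the commands. The second shows what umask 022 does to newly created directories and files. The helper names, the `RUN` variable, the root account, and the target host names (hadoop16, hadoop17) are assumptions.

```shell
# Sketch: copy the public key to each host given as an argument.
# RUN defaults to "echo" (dry run); call with RUN= to really execute.
distribute_key() {
    for host in "$@"; do
        ${RUN-echo} ssh-copy-id "root@$host"
    done
}

# Sketch: create a directory and a file under umask 022 and print their mode strings.
umask_demo() {
    dir=$(mktemp -d)
    (
        umask 022
        mkdir "$dir/d"
        touch "$dir/f"
        ls -ld "$dir/d" | cut -c1-10   # mode of the new directory
        ls -ld "$dir/f" | cut -c1-10   # mode of the new file
    )
    rm -rf "$dir"
}
```

A key pair would first be generated on hadoop15 with `ssh-keygen -t rsa`, followed by `RUN= distribute_key hadoop16 hadoop17` and a check such as `ssh hadoop16 hostname`. Running `umask_demo` prints `drwxr-xr-x` for the directory and `-rw-r--r--` for the file.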

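8. Install MySQL

# Install the MySQL repository; CentOS 7 does not ship one by default
# Start MySQL
# Create the root administrator
# Log in
# Enable remote access
# Note: after enabling remote access, local logins kept failing while remote logins worked. The cause is an account in MySQL with an empty user name that has local access; the login matched that entry first, so the local login failed.
# Deleting that user fixes it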
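The fix described in the note (removing the account with an empty user name) can be sketched as the SQL below, wrapped in a shell function so it can be piped into the client. The helper name is hypothetical, and the statements assume a MySQL 5.x style `mysql.user` table.

```shell
# Sketch: print the SQL that inspects and removes anonymous (empty-name) accounts.
anonymous_user_cleanup_sql() {
    cat <<'EOF'
-- List accounts; rows with an empty User are the anonymous accounts.
SELECT Host, User FROM mysql.user;
-- Remove the anonymous accounts and reload the grant tables.
DELETE FROM mysql.user WHERE User = '';
FLUSH PRIVILEGES;
EOF
}
```

It could be applied with `anonymous_user_cleanup_sql | mysql -uroot -p`.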

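Switch the yum repositories to a local source for offline installation

1. Install the httpd service (master server)

yum -y install httpd

2. Put the packages downloaded above under /var/www/html (master server)

# Create a directory under the default directory of the http service
[root@hadoop15 ~]$ mkdir /var/www/html/hdp
# Upload the packages downloaded earlier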

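If the system disk is short on space, you can move the directory that httpd serves instead of using /var/www/html.

Run vim /etc/httpd/conf/httpd.conf and find the entry containing "/var/www/html" (Apache's document root), then change /var/www/html to /data (or whichever directory you want to use).

Next find <Directory "/var/www/html">, which defines the settings Apache applies to /var/www/html, and change /var/www/html to /data there as well (or to the directory you chose).

Restart the httpd service.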

If this worked, the uploaded files are visible through the web server.

# Check that the files can be accessed

4. Build the local repository

Install the tools for building a local repository (master server)

[root@hadoop15 hdp]# yum install yum-utils createrepo yum-plugin-priorities -y
[root@hadoop15 hdp]# createrepo ./

5. Change the repository URLs in the repo files (master server)

1. Open the Ambari repo file
# VERSION_NUMBER=2.7.3.0-139
# json.url = http://public-repo-1.hortonworks.com/HDP/hdp_urlinfo.json

1. Open the HDP repo file
# VERSION_NUMBER=3.1.0.0-78

yum clean all
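The contents of the edited repo files were lost, so here is a sketch of what a repo definition pointing at the local httpd server could look like. The helper name, the repo id, the base URL layout under http://hadoop15/hdp/, and `gpgcheck=0` are all assumptions; adjust the URL to wherever the unpacked packages actually sit.

```shell
# Sketch: write a minimal yum repo definition that points at a local mirror.
write_local_repo() {
    repo_id=$1
    base_url=$2
    repo_file=$3
    cat > "$repo_file" <<EOF
[$repo_id]
name=$repo_id
baseurl=$base_url
gpgcheck=0
enabled=1
priority=1
EOF
}
```

For instance, `write_local_repo ambari-2.7.3.0 http://hadoop15/hdp/ambari/centos7/2.7.3.0-139 /etc/yum.repos.d/ambari.repo`, then `yum clean all` and `yum repolist` to confirm the repository is picked up.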

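6. Distribute the files to the child nodes with a script

#!/bin/bash
# 1. Get the number of input arguments; exit immediately if there are none
# 2. Get the file name
# 3. Get the absolute path of the parent directory
# 4. Get the current user name
# 5. Loop over the nodes
# Add execute permission
chmod 777 xsync

# Remember to change into the yum.repos.d directory before distributing the repo files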
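Only the comments of the distribution script survived. The sketch below follows those five steps, written as a function so it can be sourced. It uses `rsync` for the copy, which is an assumption about the original script, as are the default host names and the `XSYNC_HOSTS` and `XSYNC_RUN` variables.

```shell
# Sketch of the xsync steps described by the comments in the article.
# XSYNC_HOSTS: space-separated target hosts (defaults are hypothetical).
# XSYNC_RUN:   set to "echo" for a dry run that only prints the rsync commands.
xsync() {
    # 1. Return immediately when no argument is given.
    if [ "$#" -lt 1 ]; then
        echo "usage: xsync <file-or-dir>" >&2
        return 1
    fi
    # 2. File name of the target.
    fname=$(basename "$1")
    # 3. Absolute path of the parent directory (symlinks resolved).
    pdir=$(cd -P "$(dirname "$1")" && pwd) || return 1
    # 4. Current user name.
    user=$(whoami)
    # 5. Loop over the target hosts and copy to the same path on each.
    for host in ${XSYNC_HOSTS:-hadoop16 hadoop17}; do
        echo "---- $host ----"
        ${XSYNC_RUN:-} rsync -av "$pdir/$fname" "$user@$host:$pdir"
    done
}
```

From /etc/yum.repos.d, a dry run such as `XSYNC_RUN=echo xsync ambari.repo` shows what would be copied before running `xsync ambari.repo` for real.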

Install Ambari-server

yum -y install ambari-server

Create the MySQL metadata databases for ambari, hive, oozie, ranger, rangerkms, and superset

CREATE DATABASE ambari;
use ambari;
CREATE USER 'ambari'@'%' IDENTIFIED BY 'ambari';
GRANT ALL PRIVILEGES ON *.* TO 'ambari'@'%';
CREATE USER 'ambari'@'localhost' IDENTIFIED BY 'ambari';
GRANT ALL PRIVILEGES ON *.* TO 'ambari'@'localhost';
CREATE USER 'ambari'@'hadoop15' IDENTIFIED BY 'ambari';
GRANT ALL PRIVILEGES ON *.* TO 'ambari'@'hadoop15';
FLUSH PRIVILEGES;
show tables;
use mysql;
select Host, User, Password from user where user = 'ambari';

CREATE DATABASE hive;
use hive;
CREATE USER 'hive'@'%' IDENTIFIED BY 'hive';
GRANT ALL PRIVILEGES ON *.* TO 'hive'@'%';
CREATE USER 'hive'@'localhost' IDENTIFIED BY 'hive';
GRANT ALL PRIVILEGES ON *.* TO 'hive'@'localhost';
CREATE USER 'hive'@'hadoop15' IDENTIFIED BY 'hive';
GRANT ALL PRIVILEGES ON *.* TO 'hive'@'hadoop15';
FLUSH PRIVILEGES;

CREATE DATABASE oozie;
use oozie;
CREATE USER 'oozie'@'%' IDENTIFIED BY 'oozie';
GRANT ALL PRIVILEGES ON *.* TO 'oozie'@'%';
CREATE USER 'oozie'@'localhost' IDENTIFIED BY 'oozie';
GRANT ALL PRIVILEGES ON *.* TO 'oozie'@'localhost';
CREATE USER 'oozie'@'hadoop15' IDENTIFIED BY 'oozie';
GRANT ALL PRIVILEGES ON *.* TO 'oozie'@'hadoop15';
FLUSH PRIVILEGES;

DROP DATABASE rangdb;
CREATE DATABASE rangdb;
use rangdb;
CREATE USER 'rangdb'@'%' IDENTIFIED BY 'rangdb';
GRANT ALL PRIVILEGES ON *.* TO 'rangdb'@'%';
CREATE USER 'rangdb'@'localhost' IDENTIFIED BY 'rangdb';
GRANT ALL PRIVILEGES ON *.* TO 'rangdb'@'localhost';
CREATE USER 'rangdb'@'hadoop15' IDENTIFIED BY 'rangdb';
GRANT ALL PRIVILEGES ON *.* TO 'rangdb'@'hadoop15';
FLUSH PRIVILEGES;

DROP DATABASE rangerkms;
CREATE DATABASE rangerkms;
use rangerkms;
CREATE USER 'rangerkms'@'%' IDENTIFIED BY 'rangerkms';
GRANT ALL PRIVILEGES ON *.* TO 'rangerkms'@'%';
CREATE USER 'rangerkms'@'localhost' IDENTIFIED BY 'rangerkms';
GRANT ALL PRIVILEGES ON *.* TO 'rangerkms'@'localhost';
CREATE USER 'rangerkms'@'hadoop15' IDENTIFIED BY 'rangerkms';
GRANT ALL PRIVILEGES ON *.* TO 'rangerkms'@'hadoop15';
FLUSH PRIVILEGES;

DROP DATABASE superset;
CREATE DATABASE superset;
use superset;
CREATE USER 'superset'@'%' IDENTIFIED BY 'superset';
GRANT ALL PRIVILEGES ON *.* TO 'superset'@'%';
CREATE USER 'superset'@'localhost' IDENTIFIED BY 'superset';
GRANT ALL PRIVILEGES ON *.* TO 'superset'@'localhost';
CREATE USER 'superset'@'hadoop15' IDENTIFIED BY 'superset';
GRANT ALL PRIVILEGES ON *.* TO 'superset'@'hadoop15';
FLUSH PRIVILEGES;
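Because the statements follow the same pattern for every service, they can also be generated rather than typed by hand. The sketch below mirrors the pattern used here (database name, user name, and password all identical, grants on `*.*` for three hosts). `gen_service_sql` is a hypothetical helper; it deliberately omits the `DROP DATABASE` and `use` lines.

```shell
# Sketch: print the CREATE DATABASE / CREATE USER / GRANT statements for one service.
gen_service_sql() {
    name=$1         # used as database name, user name, and password, as in the article
    local_host=$2   # host name of the MySQL node, e.g. hadoop15
    echo "CREATE DATABASE $name;"
    for host in '%' localhost "$local_host"; do
        echo "CREATE USER '$name'@'$host' IDENTIFIED BY '$name';"
        echo "GRANT ALL PRIVILEGES ON *.* TO '$name'@'$host';"
    done
    echo "FLUSH PRIVILEGES;"
}
```

A loop such as `for svc in ambari hive oozie rangdb rangerkms superset; do gen_service_sql "$svc" hadoop15; done | mysql -uroot -p` would then create all six in one go. Outside a test cluster, granting on `name.*` instead of `*.*` and choosing real passwords would be the safer design.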

Install the MySQL driver and connect ambari-server to MySQL

wget https://dev.mysql.com/get/Downloads/Connector-J/mysql-connector-java-5.1.46.zip
# Add the driver path to Ambari

Initialize ambari-server and start it

[root@hadoop15 ~]# ambari-server setup

Install ambari agent

yum install ambari-agent -y

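# Open the configuration file
# Change the hostname to the machine name of the control node (an IP address also works); on every machine this hostname must point to the master node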
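The agent setting can be changed without opening an editor. This sketch assumes the file is /etc/ambari-agent/conf/ambari-agent.ini (the path mentioned in the troubleshooting notes of this article) and that the server address is the `hostname=` key inside its `[server]` section; `set_agent_server` is a hypothetical helper.

```shell
# Sketch: point the agent at the Ambari server by rewriting hostname= in [server] only.
set_agent_server() {
    ini=$1
    server=$2
    tmp=$(mktemp)
    awk -v srv="$server" '
        /^\[/ { in_server = ($0 == "[server]") }                         # track the current section
        in_server && /^hostname[ \t]*=/ { print "hostname=" srv; next }  # replace the key
        { print }                                                        # keep everything else
    ' "$ini" > "$tmp" && cat "$tmp" > "$ini"
    rm -f "$tmp"
}
```

On each node: `set_agent_server /etc/ambari-agent/conf/ambari-agent.ini hadoop15`, then `ambari-agent start`.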

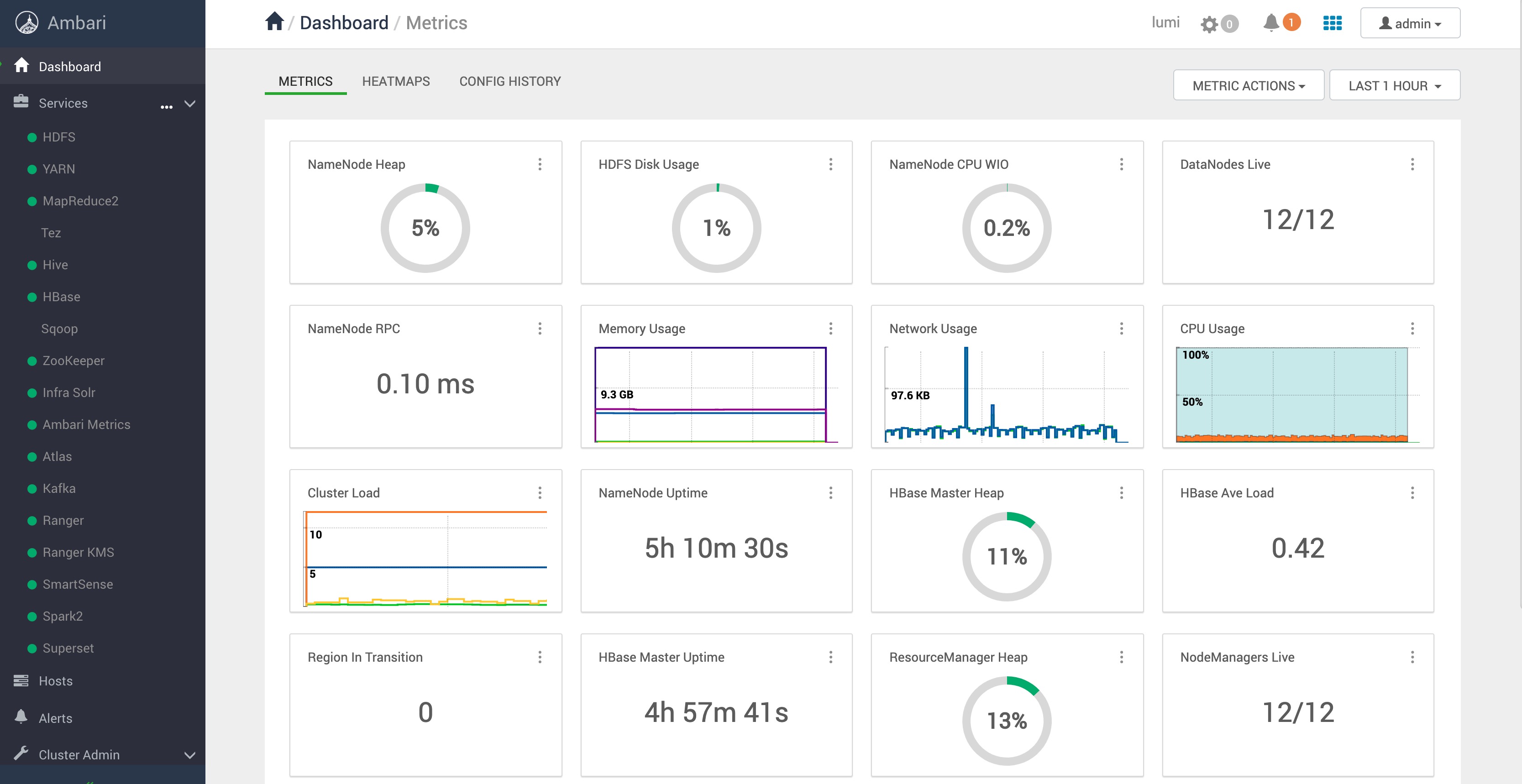

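Access the ambari-server web page

The default port is 8080. Username: admin; Password: admin; URL: http://ip:8080

Note: ip is the IP address of the master node.

Before installing the Hive component you must run:

ambari-server setup --jdbc-db=mysql --jdbc-driver=/usr/share/java/mysql-connector-java.jar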

Install, configure, and deploy the HDP cluster

Installation succeeded

Reinstalling Ambari

1. Stop all components

1.1.1. Stop all components

Use Ambari to stop every component in the cluster. If a component cannot be stopped, kill it directly with kill -9 XXX

1.1.2. Stop ambari-server and ambari-agent

ambari-server stop

1.1.3. Remove all Ambari components with yum

sudo yum remove -y hadoop_3* ranger* zookeeper* atlas-metadata* ambari* spark* slide* hive* oozie* pig* tez* hbase* knox* storm* accumulo* falcon* smartsense*

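1.1.4. Delete leftover files

Important: check very carefully before deleting anything here.

When Ambari installs a Hadoop cluster it creates a number of users. When tearing the cluster down, remove those users and their directories as well; this avoids file permission errors once the new cluster is running. In short, delete everything Ambari created, otherwise the reinstall will fail with all kinds of "file not found" errors.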

sudo userdel oozielog /hadoop*log /hive*log /ambari-*log /hbaselog /sqoop
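The original cleanup script is mostly lost, so here is a cautious sketch for the user-removal part only. It prints a `userdel` command for each given account that actually exists, so the list can be reviewed before anything is deleted. The helper name is hypothetical, and apart from oozie the account names in the usage note are assumptions about what a typical HDP install creates.

```shell
# Sketch: print (not run) a userdel command for every listed account that exists.
list_userdel_commands() {
    for user in "$@"; do
        # id succeeds only for accounts present on this machine.
        if id "$user" >/dev/null 2>&1; then
            echo "sudo userdel $user"
        fi
    done
}
```

For example, `list_userdel_commands oozie hive hdfs yarn zookeeper hbase` shows what would be removed; once the list looks right, the output can be piped to `sh`.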

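1.1.5. Clean up the database

Drop the ambari database in MySQL.

1.1.6. Reinstall Ambari

After the cleanup above, reinstalling Ambari and the Hadoop cluster (including HDFS, YARN + MapReduce2, ZooKeeper, Ambari Metrics, and Spark) succeeded.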

Problems encountered

1. The hostname was not configured when reinstalling

vi /etc/ambari-agent/conf/ambari-agent.ini

2. The yum cache was not cleared

3. Leftover files were not deleted completely